ICYMI, Eliezer Yudkowsky, a self-taught artificial intelligence decision theorist (whatever that is), got the tech world in a tizzy by suggesting that a proposed six-month pause in AI development is not enough and we should shut it all down now or “literally everyone on Earth will die.”

He sees this not as a possibility but as the most likely outcome.

“Many researchers [expect] that the most likely result of building a superhumanly smart AI, under anything remotely like the current circumstances, is that literally everyone on Earth will die. Not as in ‘maybe possibly some remote chance,’ but as in ‘that is the obvious thing that would happen.’”

Yudkowsky concludes that we must “Shut it all down,” and pronto. “We are not ready,” he says. “We are not on track to be significantly readier in the foreseeable future. If we go ahead on this everyone will die, including children who did not choose this and did not do anything wrong.”

There he goes again with that “everyone will die” mantra. I counted at least five such warnings. Think he’s trying to tell us something? Of course the piece was published in Time and the dude’s from Berkeley, which may explain some things.

Keep in mind that Yudkowsky’s views on AI are simpatico with the thinking of Oxford philosopher Nick Bostrom, who popularized the simulation argument: the notion that this may not be reality and that we may not be human but code inside a computer simulation designed by a master race of futuristic posthumans, whatever they are.

So there is that.

Of course, the recent hysteria over AI was prompted by the uncanny work of OpenAI’s ChatGPT, which has wowed the world with its writing and testing prowess. But here’s the thing: the ability to answer questions logically and write eloquent prose does not mean ChatGPT is intelligent or even capable of thought.

A couple of years ago I had the opportunity to chat with a well-known pioneer in natural language processing, or NLP, a branch of AI, who explained what led to recent advances in computers’ ability to understand human language and respond to inquiries in kind.

Make no mistake, that’s all ChatGPT is. What it does is accept an inquiry, draw on what it learned from enormous amounts of training data, come up with the most likely answer, and synthesize a response using proper language composition, grammar and syntax.

Think of it as souped-up search capability combined with natural language synthesis.

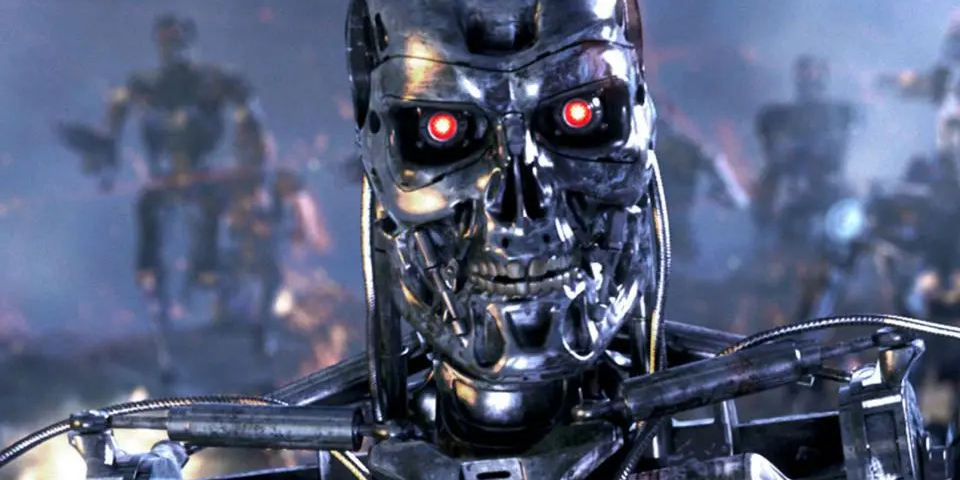

Granted, GPT is very good at what it does, which is why search companies Google and Microsoft are going at it big-time, but that’s a long, long way from AI becoming sentient and creating an army of terminators to hunt down and destroy the human race.

The other problem with Yudkowsky’s plea to shut down all AI development on Earth is that it’s ludicrous. We live in a competitive and dangerous world. If the specter of nuclear, biological and environmental holocaust couldn’t get everyone to cooperate or at least behave themselves, the nebulous notion of mass extinction by computer code isn’t going to move the needle either.

There are plenty of things to be concerned about these days. Rest assured that AI isn’t one of them.